Preface

With the growing demand for cloud-native systems, microservice-based architecture has become a popular choice for building distributed applications. Microservices let teams develop services around bounded contexts, so the architecture is composed of a collection of polyglot services, each focusing on a distinct business capability. This makes the architecture more reliable and scalable, and enables new features to be deployed quickly.

Like other architectural approaches, microservices architecture involves trade-offs, and one of these trade-offs relates to the communication between microservices. In a monolithic architecture, communication occurs through function calls within a single process, which is simple and does not introduce any additional overhead. However, in microservices, communication takes place over the network, which introduces more complexity to the overall architecture.

Microservices often require secure, reliable, and fast communication across the network. To achieve this, each microservice needs to incorporate logic to address these communication concerns. Unfortunately, this logic must be replicated in every microservice within the architecture. As a result, any future enhancements or upgrades to the communication logic will necessitate modifications across all microservices, leading to redundancy, increased costs, and longer upgrade times.

To tackle these challenges of redundancy and complexity, a service mesh can be employed in a distributed architecture. By implementing a service mesh, you can centralize and abstract the communication logic from individual microservices. The service mesh acts as a dedicated layer that handles communication-related tasks, such as encryption, authentication, load balancing, and monitoring.

What is a Service Mesh?

A service mesh is a dedicated infrastructure layer that facilitates communication between microservices. This layer is typically attached to each microservice as a sidecar container, offering capabilities such as authentication, traffic management, tracing, and load balancing. Adopting a service mesh makes it much easier to separate the business logic from the network logic.

By plugging the service mesh into a sidecar container, each microservice can leverage the features and functionality provided by the service mesh. This enables the decoupling of concerns, allowing the microservice to focus solely on its core business logic while offloading network-related tasks to the service mesh. This clear separation ensures that the network logic, such as handling secure communication and managing traffic, remains centralized and can be managed independently.

The service mesh acts as a transparent intermediary between microservices, intercepting and managing communication between them. It handles tasks like authentication to ensure secure interactions, traffic management to control the routing and distribution of requests, tracing for monitoring and debugging purposes, and load balancing for efficient resource utilization. These capabilities are inherent to the service mesh infrastructure and can be easily utilized by integrating the sidecar container into microservices.

Before diving into the implementation of the service mesh, let’s first explore the concept of the sidecar pattern. The sidecar pattern is a design pattern where an additional container, called a sidecar container, is attached to the main container of an application. This sidecar container runs alongside the main application container and provides additional functionality and services that support or enhance the main container’s behavior.

Sidecar Pattern

Just as a sidecar is attached to a motorcycle to extend what the motorcycle can do, a sidecar container is attached to the main container to extend its capabilities. A sidecar implements cross-cutting concerns that would otherwise live in the main container. It moves that redundant code out of the main container into a separate process whose lifespan matches that of the main container.

A service mesh is usually added as a sidecar to each microservice. This reduces complexity by decoupling the business logic from the network logic. It also means that future enhancements to the network layer will not require any changes to the main container.

The sidecar container, acting as part of the service mesh, can provide a wide range of features, including:

- Traffic management: The sidecar container can handle routing, load balancing, and traffic shaping to ensure optimal distribution of requests among microservices.

- Service discovery: It can assist in dynamically discovering and registering microservices within the service mesh, allowing for seamless communication between them.

- Security and authentication: The sidecar container can handle encryption, authentication, and authorization, ensuring secure communication between microservices.

- Observability and monitoring: It can facilitate tracing, logging, and metrics collection, providing insights into the behavior and performance of microservices.
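
The idea behind the features above can be sketched in a few lines. This is only an in-process illustration: a real sidecar is a separate container running a network proxy, and the names `Sidecar`, `order_service`, and the token check are hypothetical. What it shows is that the business handler contains no security or metrics code, while the wrapper applies both to every request.

```python
import time
from typing import Callable, Dict

# Hypothetical types for illustration; a real sidecar is a separate process
# (a network proxy), not a Python wrapper around a function.
Request = Dict[str, str]
Handler = Callable[[Request], str]


def order_service(request: Request) -> str:
    """Business logic only: no authentication or metrics code here."""
    return f"order created for {request['user']}"


class Sidecar:
    """Wraps a handler and applies cross-cutting concerns around each call."""

    def __init__(self, handler: Handler, valid_tokens: set):
        self.handler = handler
        self.valid_tokens = valid_tokens
        self.metrics = {"requests": 0, "rejected": 0, "total_seconds": 0.0}

    def handle(self, request: Request) -> str:
        self.metrics["requests"] += 1
        # Security: reject callers that do not present a known token.
        if request.get("token") not in self.valid_tokens:
            self.metrics["rejected"] += 1
            return "401 Unauthorized"
        # Observability: time the call to the business handler.
        start = time.perf_counter()
        try:
            return self.handler(request)
        finally:
            self.metrics["total_seconds"] += time.perf_counter() - start


sidecar = Sidecar(order_service, valid_tokens={"secret-token"})
ok = sidecar.handle({"user": "alice", "token": "secret-token"})
denied = sidecar.handle({"user": "bob", "token": "wrong"})
```

Changing the authentication rule or adding a new metric touches only `Sidecar`; `order_service` stays as it is, which is the decoupling the pattern is meant to provide.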

Different Layers of Service Mesh

Data Plane:

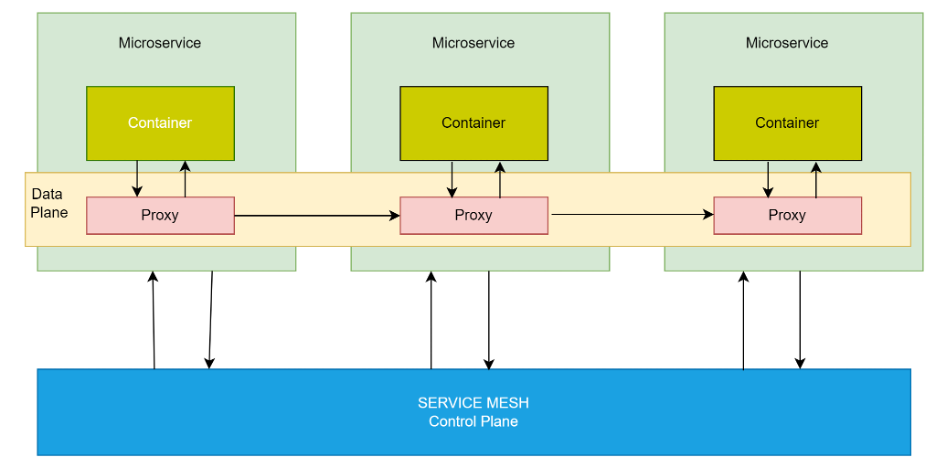

In a service mesh, the data plane comprises proxies that are deployed as sidecars alongside the main microservices. These proxies intercept the traffic going to and from the main container. They then apply cross-cutting concerns such as rate limiting, service discovery, authentication, and tracing.
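
As a rough sketch of one such concern, the example below models a proxy that rate-limits inbound requests with a token bucket before passing them to the service. The class names, the limits, and the injected fake clock are all assumptions made for illustration; production proxies implement this at the network level and are configured rather than coded.

```python
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float]):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class DataPlaneProxy:
    """Intercepts inbound requests before they reach the service."""

    def __init__(self, service: Callable[[str], str], limiter: TokenBucket):
        self.service = service
        self.limiter = limiter

    def inbound(self, payload: str) -> str:
        if not self.limiter.allow():
            return "429 Too Many Requests"
        return self.service(payload)


# A fake clock keeps the example deterministic.
fake_now = [0.0]
proxy = DataPlaneProxy(
    service=lambda payload: f"handled {payload}",
    limiter=TokenBucket(capacity=2, rate=1.0, clock=lambda: fake_now[0]),
)
results = [proxy.inbound(f"req-{i}") for i in range(3)]  # third call exceeds the burst
fake_now[0] = 1.0  # one second later, one token has been refilled
results.append(proxy.inbound("req-3"))
```

The service function never sees the rejected request, which is the point of placing the limiter in the proxy rather than in the service.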

Control Plane:

In a service mesh, the control plane is the central place from which all the proxies deployed in the data plane are managed. Every proxy registers itself with the control plane and receives its configuration from it. The control plane also exposes an API for configuring the proxies to suit each use case.
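
The register-then-configure relationship can be sketched as follows. This is a simplified in-memory model, not the API of any particular mesh: `ControlPlane`, `Proxy`, and the configuration keys `timeout_seconds` and `max_retries` are hypothetical names chosen for the example.

```python
from typing import Dict


class Proxy:
    """Data-plane proxy that holds the configuration it was last given."""

    def __init__(self, service_name: str):
        self.service_name = service_name
        self.config: Dict[str, object] = {}

    def apply(self, config: Dict[str, object]) -> None:
        self.config = dict(config)  # copy so later edits do not leak in


class ControlPlane:
    """Central registry that distributes configuration to registered proxies."""

    def __init__(self, default_config: Dict[str, object]):
        self.config = dict(default_config)
        self.proxies: Dict[str, Proxy] = {}

    def register(self, proxy: Proxy) -> None:
        # A proxy receives the current configuration as soon as it registers.
        self.proxies[proxy.service_name] = proxy
        proxy.apply(self.config)

    def update(self, **changes: object) -> None:
        # One change here reaches every proxy; no service code is touched.
        self.config.update(changes)
        for proxy in self.proxies.values():
            proxy.apply(self.config)


control_plane = ControlPlane({"timeout_seconds": 5, "max_retries": 2})
orders, payments = Proxy("orders"), Proxy("payments")
control_plane.register(orders)
control_plane.register(payments)
control_plane.update(timeout_seconds=2)
```

After the `update` call both proxies carry the new timeout, which mirrors how a single policy change in the control plane propagates across the data plane.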

CLI:

A service mesh typically provides CLI tools that let users connect to the service mesh cluster and inspect or diagnose the status of the proxies and the rules applied to them.

Benefits of Applying Service Mesh

- Decoupling: By separating the service mesh logic into sidecar proxies, it decouples the complexities and dependency of network communication from the business logic.

- Language and Framework Agnostic: Because the mesh runs as sidecar proxies, it can be injected into any microservice irrespective of its tech stack. This simplifies integration and keeps the effort required low.

- Reliable: With fault tolerance moved out of the primary container, the service can focus on implementing the business logic while the service mesh implements retry logic, circuit breakers, and timeouts. This makes the system more reliable and responsive.

- Ease of upgrade: The service mesh proxy runs as a separate container within the same pod, so upgrading the main container doesn’t impact the service mesh. Similarly, upgrading the service mesh doesn’t require the main container to be upgraded.
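
To make the reliability point concrete, here is a minimal sketch of retry logic combined with a circuit breaker, as a proxy might apply it on behalf of a service. It is an illustration under simplifying assumptions: the class and function names are hypothetical, only `ConnectionError` is treated as retryable, and timeouts, backoff, and a half-open recovery state are left out.

```python
from typing import Callable


class CircuitOpenError(Exception):
    """Raised when the breaker refuses to call a failing upstream."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int):
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    @property
    def is_open(self) -> bool:
        return self.consecutive_failures >= self.failure_threshold

    def call(self, func: Callable[[], str]) -> str:
        if self.is_open:
            raise CircuitOpenError("upstream marked unhealthy")
        try:
            result = func()
        except ConnectionError:
            self.consecutive_failures += 1
            raise
        self.consecutive_failures = 0  # any success resets the failure count
        return result


def call_with_retries(breaker: CircuitBreaker, func: Callable[[], str], max_attempts: int) -> str:
    """Retries on ConnectionError until success, attempts run out, or the circuit opens."""
    last_error: Exception = RuntimeError("no attempts made")
    for _ in range(max_attempts):
        try:
            return breaker.call(func)
        except ConnectionError as error:
            last_error = error
    raise last_error


attempts = {"count": 0}


def flaky_upstream() -> str:
    # Hypothetical upstream that fails twice, then succeeds.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("connection reset")
    return "200 OK"


breaker = CircuitBreaker(failure_threshold=5)
response = call_with_retries(breaker, flaky_upstream, max_attempts=3)
```

The calling service only sees the final response. If the upstream kept failing until the threshold was reached, the breaker would raise `CircuitOpenError` immediately instead of sending more traffic to an unhealthy service.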

Common Challenges with Service Mesh

Integrating a service mesh into your architecture is not without its challenges, and it’s important to carefully consider these challenges before adopting it, as it is not a simple solution.

- Complexity: A service mesh adds another layer to the communication between microservices. This layer requires extra management and adds cost to the overall architecture.

- Debugging: Adding an additional layer to microservices can compound the already significant challenge of debugging, making it even more difficult for developers.

- Performance Overhead: All traffic to and from a microservice is intercepted by the service proxies, which introduces performance overhead. Each additional layer adds latency and consumes network bandwidth.

- Consumption of extra resources: Each service proxy consumes resources such as memory and CPU to do its work. This cost grows linearly as more microservices are added to the architecture.

- Learning curve: A service mesh has its own concepts, configuration, and rules. Implementing and managing a service mesh in your architecture will require proper training for your development and operations teams.
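
The resource and latency costs above lend themselves to a back-of-the-envelope estimate. The helper below is a rough sketch, and every figure passed to it (service count, replicas, per-proxy memory, CPU, and latency) is a placeholder chosen for illustration, not a measurement of any real proxy. Substitute numbers observed in your own environment.

```python
def proxy_overhead(services: int, replicas_per_service: int,
                   memory_mb_per_proxy: float, cpu_millicores_per_proxy: float) -> dict:
    """Total sidecar footprint: one proxy per running service instance."""
    proxies = services * replicas_per_service
    return {
        "proxies": proxies,
        "memory_mb": proxies * memory_mb_per_proxy,
        "cpu_millicores": proxies * cpu_millicores_per_proxy,
    }


def added_latency_ms(hops: int, latency_ms_per_proxy: float) -> float:
    """Each service-to-service hop crosses two proxies: the caller's and the callee's."""
    return hops * 2 * latency_ms_per_proxy


# Placeholder inputs, purely illustrative.
footprint = proxy_overhead(services=20, replicas_per_service=3,
                           memory_mb_per_proxy=50, cpu_millicores_per_proxy=100)
extra_latency = added_latency_ms(hops=4, latency_ms_per_proxy=1.0)
```

Because the footprint is a simple product, doubling the number of service instances doubles the proxy cost, and a request that fans out through more hops pays the per-proxy latency more times.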

Conclusion

The service mesh has gained popularity in the containerized ecosystem because it provides a dedicated infrastructure layer for service-to-service communication. It is deployed as a sidecar container and handles cross-cutting concerns such as circuit breaking, authentication, service discovery, logging, and tracing.

To conclude, service mesh simplifies and abstracts service communication, making it an ideal choice for applications with a large number of services. By separating the service mesh logic, managing and upgrading service communication becomes easier with minimal impact on the business application. However, it’s crucial to analyze the challenges and complexities that service mesh introduces before integrating it into your architecture. Careful consideration should be given to ensure that the benefits outweigh the potential drawbacks and that the service mesh aligns effectively with your specific application requirements.